Object-Based Mixing in Spatial Audio Music Production: A Comparison with Stereo

A guest article by Daniela Rieger

“While an engineer familiar with the complications of sound reproduction may be amazed at the tens of thousands of trouble-free performances given daily, the public takes our efforts for granted and sees nothing remarkable about it. […]

The public has to hear the difference and then be thrilled by it […]. Improvements perceptible only through direct A-B comparisons have little box-office value.”

(Garity & Hawkins, 1941)

Object-based mixing offers a new approach to the audio mix: audio professionals work with individual audio elements, and listeners can adjust the result to their personal preferences and listening environments.

The appeal of 3D audio

3D audio, or immersive audio, undoubtedly has an appeal, perhaps even more so to sound creators than to consumers. This is also reflected in the large number of papers on “3D audio” at audio conferences in recent years (Tonmeistertagung 2018, Prolight+Sound 2019, AES Virtual Vienna 2020, TMT 2021).

3D audio brings with it a new complexity – codecs and formats, a wide variety of technologies and software, new, creative design possibilities, expanded playback systems, and immersive sound that enhances the three-dimensional listening experience. But it is this complexity that risks taking the focus away from the end consumer.

As the two Fantasound developers William E. Garity and John N.A. Hawkins noted in 1941, no matter how complex the technology is:

Consumers are largely not interested in all the hurdles that have been overcome or all the technology behind it. They want to hear the content with as little complication as possible and experience added value.

This is the only way a new technology can work – the only way to generate enthusiasm and interest. That’s the only way the new format will be consumed and the only way it can catch on. Garity and Hawkins also found this out almost 80 years ago, and it has not lost any of its relevance today.

The greatest appeal is offered when technology can be used creatively. Only then can a “sweet spot” be found that really ignites the innovative and new potential. So can immersive Spatial Audio music production really stand a chance against decades-old stereo workflows?

Immersive audio past and present

3D audio has been established in the film industry for some time now (Dolby Atmos, DTS:X, Auro-3D), and there are already several thousand cinema films in these formats. The availability of (channel-based) 3D music productions, however, is still quite limited.

Object-based immersive music first reached the market at the end of 2019, when the two technologies Dolby Atmos Music and 360 Reality Audio were introduced. Strictly speaking, only 360 Reality Audio is a purely object-based technology; Dolby Atmos is “hybrid” (it also uses a channel bed). Audio objects allow for more flexible and personalized sound experiences by enabling sound to be placed anywhere within a 3D sound space using metadata.

There had been several experiments before, but they remained strictly niche. The sticking point was, and still is, that very few consumers have the necessary number of loudspeakers at home. This is where soundbars and smart speakers are becoming more and more suitable.

Therefore, a format was needed that is speaker- and thus channel-independent. The keyword: object-based production. Thus, 360 Reality Audio and Dolby Atmos Music are just bringing a breath of fresh air to an old problem.

Note: “object-based” is not to be equated exclusively with “3D”, “immersive” or “spatial audio”. In principle, object-based mono content can also be produced. In the following, however, it is assumed that object-based music production is immersive.

Will 3D music suffer the same fate as quadraphony?

As with quadraphony from the 1960s and the subsequent introduction of 5.1 surround – neither of which succeeded commercially – object-based formats are new technologies for the production, distribution and playback of music.

Unlike back then, however, the starting position today is different: while 5.1 surround, as a channel-based format, was tied to a fixed loudspeaker layout and to distribution via physical media, object-based audio can be flexibly rendered to different playback systems (loudspeakers and headphones) and distributed via music streaming services.

Apart from the aforementioned necessary playback situation at home, the chicken-and-egg problem came to the fore again. As long as there was no good surround content, the motivation for the investment was also low. Conversely, sound producers had to be convinced to produce in a format that could not be reproduced at all by the majority of users.

Production workflows: Established for decades

Since the first attempts at sound recording towards the end of the 19th century, the field of “music” has been constantly changing and subject to continuous development:

- Recording and production techniques (mono, stereo, 5.1 surround, 3D)

- Distribution methods (record, CD, digital, streaming services)

- Playback options (record player, radio, loudspeakers, headphones, soundbar).

Thus, over the years, many individual production steps have been established in music production, depending on genre and context, and uniform conventions have been consolidated on the distribution and playback sides.

This process of developing a workflow is still in its infancy for object-based audio in general and object-based music production in particular. Audio objects enable more flexible and creative production workflows, with different terminology, formats, and codecs affecting all steps of the production chain from mixing to playback.

New requirements for 3D music

Due to the diverse types of music distribution (record, CD, Blu-ray, digital portals such as iTunes or Soundcloud, streaming services), new requirements arise, especially with regard to possible format-agnostic production methods. Object-based audio formats treat each sound source as an independent object with unique metadata, allowing dynamic rendering based on listener position and room acoustics. So various challenges await all those involved in the production. Suddenly, mixing decisions that we have made almost automatically throughout our audio lives must be fundamentally questioned.

Type of object-based mixing production

One aspect is the type of production. Currently, in addition to conventional stereo mixes, production is also channel-based, scene-based and object-based, immersive as well as purely binaural. Object-based production allows for more personalized and immersive audio mixes, enhancing audio delivery in various formats by enabling individual adjustments such as changing volume levels or selecting different audio elements based on the listener’s environment. This multiplicity of production modes, based on different codecs and involving different final formats and playback requirements, creates confusion and may deter the investment of production resources.

Ideally, in the future, production would only be object-based: There would thus be one format that can be played back on every device in the best possible way – and all this with the use of only one master file. This is different from classical surround productions, where downmixes and upmixes are necessary for cinema or TV.

Nevertheless, even object-based formats such as Dolby Atmos or 360RA have their limits. For one, the production effort is, at least currently, higher than in stereo. For another, some applications cannot easily be categorised as “music” or “film” entertainment and have special production requirements that the currently available object-based technologies do not support. The 3D audio world is diverse, as this overview shows.

Similar to quadraphony in the 1960s-1980s, the various formats and codecs carry the risk that none of them will catch on. Nevertheless, there is a difference here: with the quad format, the problem lay more with the end user (who saw no need to buy the speakers for quad playback), whereas with object-based content the problems lie more with the sound creators and the technology itself.

Why is object-based music production so different from stereo music production?

Currently, object-based music production means production for a specific codec. This is very different from stereo music production, which is codec-agnostic: a stereo mix is not produced for a specific technology, and the end product can be used flexibly.

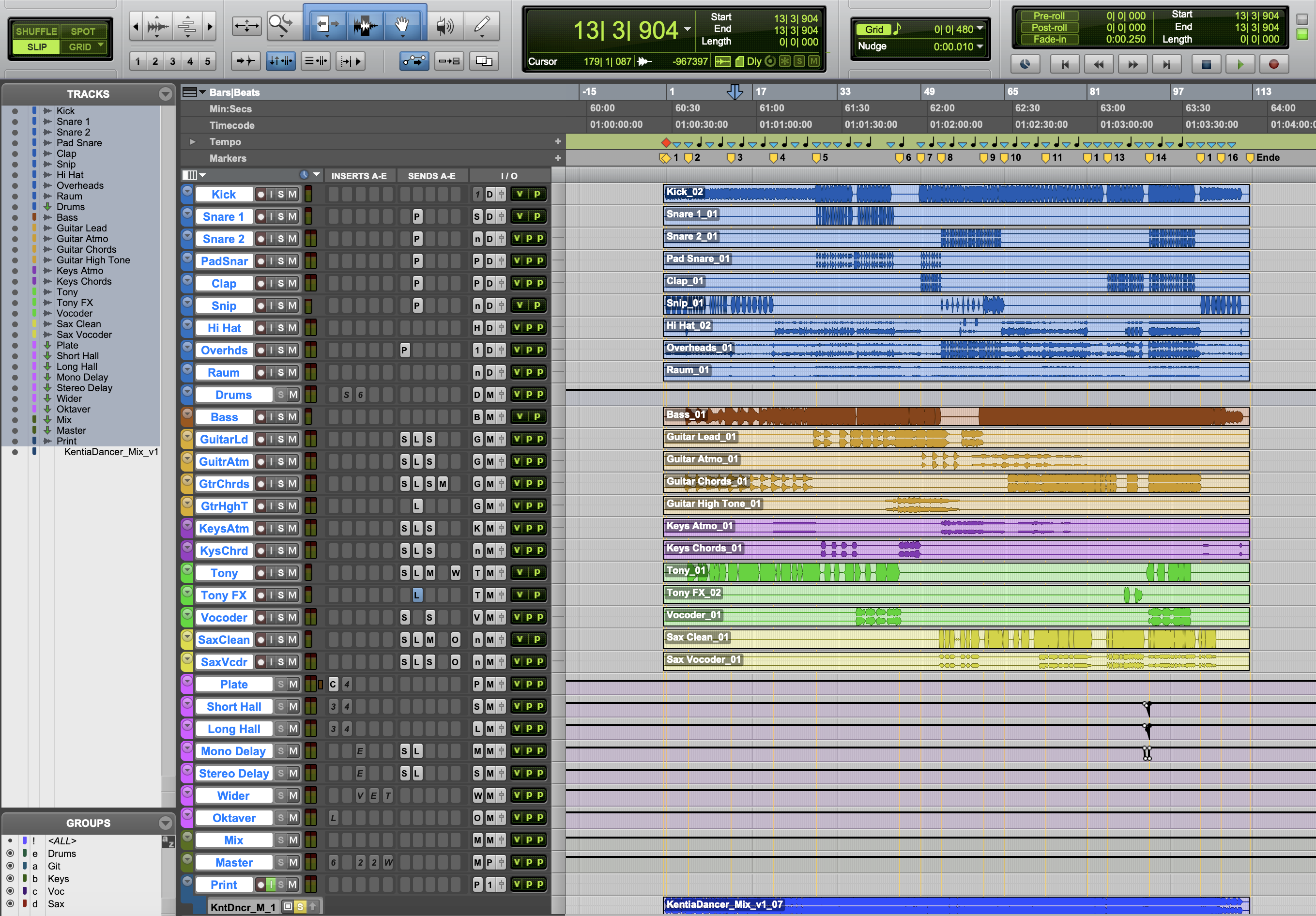

In object-based production, each sound source is treated as an independent audio object with metadata such as location, volume, and direction. This allows for dynamic rendering of audio based on various factors like speaker layout and listener position, thus delivering a more immersive and personalized listening experience. Conventional production workflows are taken up and developed further. Both object-based technologies can be integrated into conventional production chains, but deviate from established stereo production processes from the step of using the object-based software up to playback.
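To make the idea of “audio plus metadata” a little more tangible, here is a minimal sketch of how an audio object could be described. The field names, the angle convention and the JSON layout are assumptions made purely for illustration; they do not correspond to the actual metadata schema of Dolby Atmos or 360 Reality Audio.

```python
from dataclasses import dataclass, asdict
import json


@dataclass
class AudioObject:
    """One sound source plus the metadata a renderer would need (hypothetical schema)."""
    name: str
    azimuth_deg: float     # horizontal angle; 0 = front, positive = left (assumed convention)
    elevation_deg: float   # vertical angle; 0 = ear level, 90 = directly overhead
    distance: float        # normalised distance from the listener, 0..1
    gain_db: float = 0.0   # level offset to apply at render time


def describe_scene(objects):
    """Serialise only the metadata; the audio itself would travel as separate mono streams."""
    return json.dumps([asdict(obj) for obj in objects], indent=2)


scene = [
    AudioObject("lead_vocal", azimuth_deg=0.0, elevation_deg=0.0, distance=0.5),
    AudioObject("synth_pad", azimuth_deg=110.0, elevation_deg=30.0, distance=0.9, gain_db=-6.0),
]
print(describe_scene(scene))
```

The point of the sketch is that nothing in this description mentions loudspeakers: the mix stores where a sound should be, and the decision about which speakers reproduce it is left to the playback side.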

The comparison of these two object-based formats has shown that there are already major differences here, especially in the export, encoding, distribution and playback processes. In order for object-based immersive productions to hold their own, it is necessary to develop more uniform processes in all areas of the production chain. But as I said, it is usually the content itself that decides which format is most suitable. A one-stop solution is not yet in sight.

Current state of object-based workflows

In object-based workflows, sound objects are treated as individual elements that can be manipulated independently. The playback system interprets metadata to position these audio elements within a three-dimensional space, ensuring precise sound placement regardless of the specific speaker configuration. This allows for a more immersive and flexible audio experience, particularly with technologies like Dolby Atmos.
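How a playback system might turn a position into speaker signals can be sketched with simple constant-power panning between the two speakers nearest to the object. This is a deliberately reduced stand-in, not the rendering algorithm of Dolby Atmos or 360 Reality Audio: it ignores elevation and distance, and the layouts, angles and function name are assumptions for illustration.

```python
import math

# Assumed example layouts: speaker azimuths in degrees (0 = front, positive = left).
LAYOUTS = {
    "stereo": [30.0, -30.0],
    "5.0": [30.0, -30.0, 0.0, 110.0, -110.0],
}


def render_gains(object_azimuth, speaker_azimuths):
    """Return one gain per speaker for a single object (horizontal plane only).

    Assumes at least two speakers at distinct angles.
    """
    n = len(speaker_azimuths)
    # Walk around the circle in order of increasing angle.
    order = sorted(range(n), key=lambda i: speaker_azimuths[i] % 360.0)
    angles = [speaker_azimuths[i] % 360.0 for i in order]
    target = object_azimuth % 360.0
    gains = [0.0] * n
    for k in range(n):
        start, end = angles[k], angles[(k + 1) % n]
        span = (end - start) % 360.0       # arc between two neighbouring speakers
        offset = (target - start) % 360.0  # object position within that arc
        if offset <= span:
            frac = offset / span
            # Sine/cosine law keeps the summed power constant across the arc.
            gains[order[k]] = math.cos(frac * math.pi / 2)
            gains[order[(k + 1) % n]] = math.sin(frac * math.pi / 2)
            break
    return gains


# The same object metadata, rendered for two different playback systems.
for name, layout in LAYOUTS.items():
    print(name, [round(g, 3) for g in render_gains(20.0, layout)])
```

The object's position is identical in both calls; only the layout handed to the renderer changes. That is the practical meaning of “regardless of the specific speaker configuration”.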

How can object-based music production become more attractive?

Unless one format prevails, at least combined production methods and the conversion between different formats must come to the fore: Going through the complete production chains for several formats for one album is time-consuming and costly.

A quality sound system enhances the immersive audio experience, making it crucial to consider how these systems will play back the final product. When using the respective production software, it became clear that already from this step onwards the workflow is strongly dependent on and dictated by technical specifications, starting with the use of the production DAW.

Furthermore, it requires the development of plug-ins that are specifically designed for use in object-based and immersive mixes (for example, reverb, compression or mastering plug-ins that can work with three-dimensional spatiality as well as with objects).

Mastering

Furthermore, the entire mastering step must be adapted for object-based mixes – especially in this case, it is necessary to establish procedures that can be enforced across formats.

This also includes integrating the new workflow step of authoring (the generation and verification of metadata) into the mastering process. Object-based audio allows for the same sound to be manipulated differently compared to traditional methods, either by panning it through different speakers or associating it with spatial coordinates using metadata.

Further development of spatial audio technical requirements

Consistent implementation of technical requirements is essential to improve both production processes and playback capabilities. Comprehensive loudness measurement and normalisation must be established for object-based formats as well as for conventional stereo productions in order to prevent the outbreak of a new “loudness war”.
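The normalisation step itself is simple arithmetic once the loudness of a mix has been measured. The sketch below assumes that an integrated loudness value in LUFS is already available from a separate meter; the function names are hypothetical and the target value in the usage lines is an illustrative placeholder, not a delivery specification of any service.

```python
def normalisation_gain_db(measured_lufs, target_lufs):
    """Gain in dB that moves a mix from its measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db):
    """Convert a gain in dB to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


def normalise(samples, measured_lufs, target_lufs):
    """Apply one common gain so the relative balance of the mix is unchanged."""
    factor = db_to_linear(normalisation_gain_db(measured_lufs, target_lufs))
    return [s * factor for s in samples]


# A mix measured at -12 LUFS, brought to an assumed target of -18 LUFS.
gain_db = normalisation_gain_db(-12.0, -18.0)
print(gain_db, round(db_to_linear(gain_db), 4))
```

Because a louder master is simply turned down by the playback side, pushing the level during production brings no advantage. In an object-based mix the same factor would have to be applied to every object and bed channel so that the spatial balance stays intact.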

Furthermore, object-based audio content can be played back on a variety of playback devices, although more speakers significantly improve the spatial resolution of sound and thus the immersive audio experience. However, there is still a lack of consistent implementation of the technical requirements in the end devices.

Financial aspects

Last but not least, the financial aspect plays a role. Stereo productions offer a variety of exploitation channels (record, CD, Blu-ray, digital portals such as iTunes or Soundcloud, streaming services) and are thus broadly positioned from an economic point of view. For object-based productions, exploitation is currently limited to a few streaming services and a few Blu-rays.

Surround sound technologies like Dolby Atmos have economic potential, as they utilize object-based mixing to create immersive listening experiences, transforming the way audio is produced and consumed.

Finally, as with any new technology, demand will determine commercial success. Through the integration of Dolby Atmos Music and 360 RA in streaming services, object-based content is presented to the general public.

If this offer is accepted by the masses, the demand for productions and the development and optimization of special software and hardware will increase – and with it the demand for more uniform workflows for the production of object-based music.
