Object-based audio for 3D music production: stereo workflow comparison

Guest post on object-based audio for music production in 3D vs. stereo by Daniela Rieger.

“Although most engineers ultimately rely on their intuition when doing a mix, they do consciously or unconsciously follow certain mixing procedures” (Owsinski, B. (2006). The Mixing Engineer’s Handbook)

Established stereo music production workflow

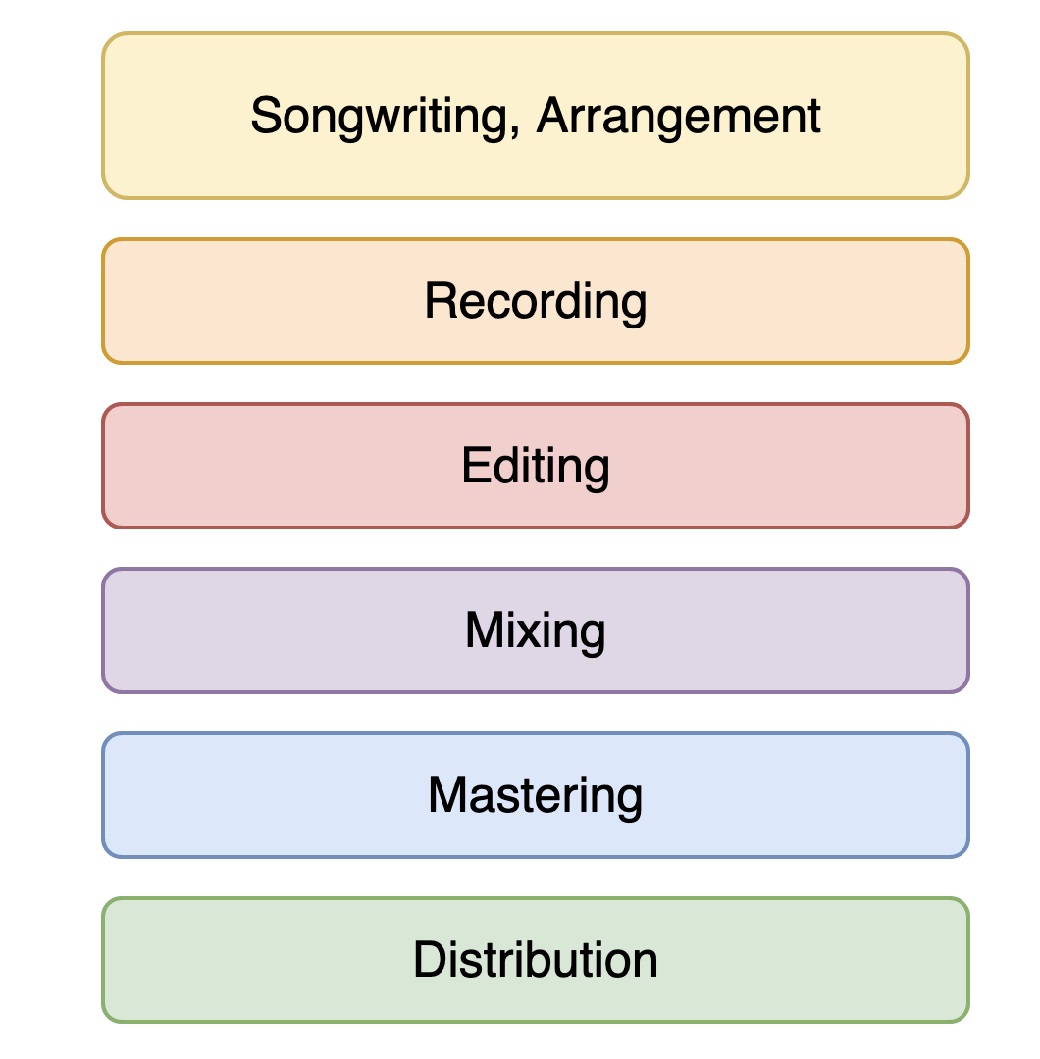

Every sound creator has an individual workflow shaped by experience, preferences and a variety of other influences. These lead to numerous subconscious, time- and material-related decisions. Nevertheless, there is an intersection of generally valid procedures. A common stereo pop music production chain might look roughly like this:

Based on this “general” workflow, parallels have been shown in the practical application of Dolby Atmos Music and 360 Reality Audio. The workflow for object-based audio may well resemble the stereo workflow in terms of production and distribution, as well as recording, editing and part of the mixing.

The workflows diverge at the point at which the object-based audio software is integrated. As soon as, for example, Dolby Atmos Production Suite or 360 WalkMix Creator (formerly 360 Reality Audio Creative Suite) is used in the production environment, new workflows emerge – with new challenges and new possibilities.

Comparison of production methods between stereo and object-based audio

Before taking a closer look at the two workflows, it is important to distinguish between the audio terms “immersive” or “3D” on the one hand and “object-based” on the other.

Difference between “3D” and “object-based”

The workflow distinction between object-based productions and stereo productions occurs, as described above, only with the integration of the corresponding software. An immersive production workflow, on the other hand, plays a role earlier:

During recording, a different spatiality can be created by considering 3D recording techniques. This can later be enhanced by using special 3D plug-ins for reverb, delay, compression and effects.

Not every immersive production is object-based, but most object-based audio productions are immersive. For the sake of simplicity, the rest of this article therefore assumes that object-based productions are also immersive.

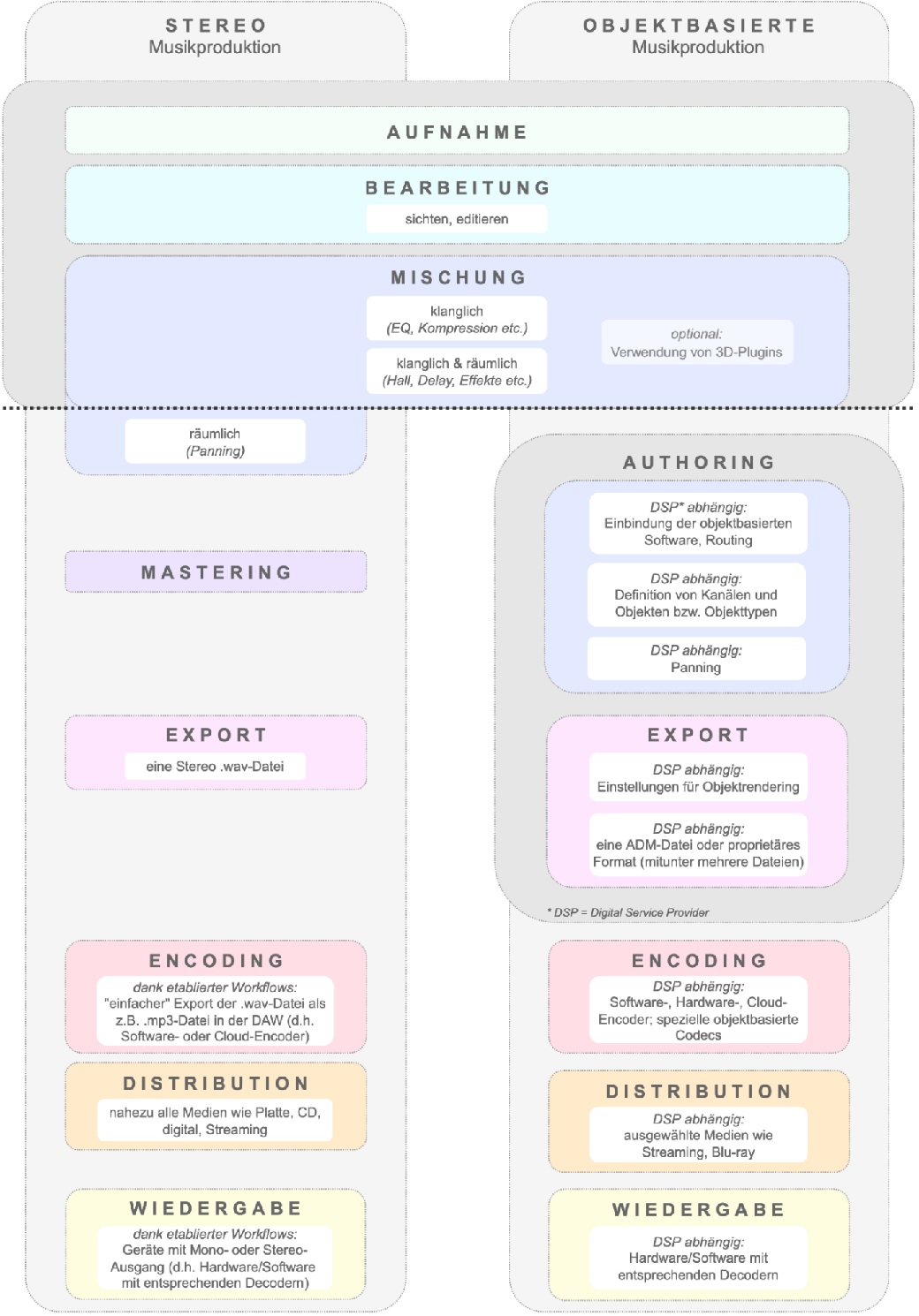

Graphical comparison

The graphic shows that the production workflow does change, but the basic steps remain very similar.

Especially in the “encoding” and “playback” steps, it becomes clear that the basic idea of how these processes work is the same. Both steps are based on the use of cloud, software or hardware encoders, and hardware or software decoders.

The biggest difference is that both encoders and decoders are firmly established and “common” for traditional channel-based music production, whereas for Next Generation Audio (NGA) they are still in the making and not yet as ubiquitous.

Starting point: stereo mix

The comparison shows that, because the workflows are so similar at the beginning, an object-based audio production can also start from an existing stereo mix.

Delivering a DAW project, provided the DAW is supported by the object-based software, makes it possible to keep the creative decisions of the stereo mix and to build an object-based audio mix on top of them.

This works because the production software (Dolby Atmos Production Suite, 360 WalkMix Creator) can be integrated into DAW projects that already contain stereo or surround mixes.

The choice of DAW currently still influences the further approach to the production, especially with regard to aux or audio tracks with channel configurations larger than 5.1 (for example, for creating multichannel bed or effects tracks). Depending on the DAW there are limitations here, but these can be solved with workarounds or special tools (example: Spatial Audio Designer (SAD) from New Audio Technology (NAT)).

Innovations: Authoring metadata

Probably the most noticeable difference between the workflows comes from the new authoring step. It covers the creation of metadata (for example, the settings for object rendering) and its verification, and it brings several innovations.

The interaction of audio + metadata results in various advantages. The best-known keyword is certainly “flexible rendering”: transferring audio + metadata ensures that the mix is adapted on the end device to the existing setup. By using objects, it is therefore possible to create not “only” a binaural headphone mix; the very same mix can also be played back on soundbars or speakers.
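To make the idea of flexible rendering a bit more tangible, here is a minimal sketch in Python. It is not how any particular renderer (Dolby, 360 Reality Audio, MPEG-H) works internally; it simply assumes a horizontal ring of loudspeakers and uses pairwise constant-power panning. The function name `render_gains` and the azimuth convention (degrees, positive to the left) are assumptions made for this illustration.

```python
import math

def render_gains(azimuth_deg, speaker_azimuths_deg):
    """Return one gain per speaker for an object at the given azimuth.

    Simplified model: the object is panned between the two neighbouring
    speakers on a horizontal ring using a sine/cosine (constant-power) law.
    Expects at least two speakers.
    """
    n = len(speaker_azimuths_deg)
    # Walk around the ring in order of increasing azimuth (0..360).
    order = sorted(range(n), key=lambda i: speaker_azimuths_deg[i] % 360)
    az = azimuth_deg % 360
    gains = [0.0] * n
    for k in range(n):
        a_idx, b_idx = order[k], order[(k + 1) % n]
        a = speaker_azimuths_deg[a_idx] % 360
        b = speaker_azimuths_deg[b_idx] % 360
        span = (b - a) % 360 or 360      # arc between the two neighbours
        offset = (az - a) % 360          # object position inside that arc
        if offset <= span:
            frac = offset / span
            gains[a_idx] = math.cos(frac * math.pi / 2)
            gains[b_idx] = math.sin(frac * math.pi / 2)
            break
    return gains

# The same object metadata (azimuth 15 degrees) on two different setups.
stereo = render_gains(15.0, [30.0, -30.0])                         # L, R
surround = render_gains(15.0, [30.0, -30.0, 0.0, 110.0, -110.0])   # L, R, C, Ls, Rs
```

The audio and the metadata stay identical; only the loudspeaker layout passed to the renderer changes. On the stereo layout the object ends up mostly in the left speaker, on the five-speaker layout it sits between center and left.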

This flexible rendering means that in NGA (Next Generation Audio), monitoring output and export are not the same thing, unlike in channel-based productions, where what is monitored is also what is exported.

Monitoring in the renderer (examples: Dolby Renderer, 360 WalkMix Plugin, Fraunhofer MHAPi, Auro Renderer and others) is a preview of what will happen on the end device. Through the monitoring selections in the tools (stereo, binaural, 5.1+4H etc.), different renderings can be previewed. However, this renderer setting and selection is completely independent of the export.
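The independence of monitoring and export can be pictured with a small sketch. The `Session` class and its fields are purely hypothetical and do not correspond to any of the tools named above; the only point is that the monitoring selection is a preview setting and is not part of what gets exported.

```python
from dataclasses import dataclass

@dataclass
class Session:
    # Hypothetical session model: a list of objects with metadata,
    # plus the layout currently chosen for monitoring.
    objects: list
    monitor_layout: str = "binaural"

    def export(self):
        # The export carries the objects and their metadata only.
        # The monitoring choice is deliberately left out.
        return {"objects": [dict(obj) for obj in self.objects]}

session = Session(objects=[{"name": "vocal", "azimuth_deg": 0.0}])

export_while_binaural = session.export()
session.monitor_layout = "5.1+4H"      # switch the preview rendering
export_while_speakers = session.export()
```

Both exports are identical, no matter which rendering was being previewed at the time.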

In addition, the way object-based audio works with metadata brings another new feature: different requirements for the mastering process.

Mastering audio objects

The major topic of “mastering” opens up new technical requirements for possible software developments. While conventional, channel-based mastering has become an established part of music production over the years, object-based audio mastering requires a new approach:

Because what is transmitted is not a final mix that plays back the same way for every consumer, but a combination of audio + metadata, traditional mastering techniques cannot be relied upon at the end of the production.

Audio objects have to be mastered, rather than loudspeaker signals as is the case with channel-based productions. Established conventions for this hardly exist at present.

In order to take a closer look at object-based audio mastering, this general step should be divided into several individual parts: sound mastering (EQ, compression), album mastering, archiving and backups, and distribution preparations. Dynamics processing (compressor, expander, limiter) in particular shapes the overall impression of a music mix.

Whereas channel-based mixes (such as stereo or 5.1 surround) often use bus compression to compress individual instruments, specific frequency ranges or the overall sound, this is considerably more difficult in object-based productions: with flexible rendering, the signals that finally reach the loudspeakers are only created on the end device, so there is no single fixed bus to compress.
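One way to see the problem is a small numerical sketch. It assumes a simple static compressor curve (threshold and ratio in dB) and two objects with the same peak level; all names and values are chosen for illustration only. Depending on the playback layout, the renderer may place both objects in the same loudspeaker or in different ones, and a bus compressor would react differently in each case.

```python
import math

def to_db(amplitude):
    """Convert a linear peak amplitude to dBFS."""
    return 20.0 * math.log10(amplitude)

def compressor_gain_db(level_db, threshold_db=-6.0, ratio=4.0):
    """Static gain reduction (in dB, <= 0) of a simple compressor."""
    over = level_db - threshold_db
    if over <= 0.0:
        return 0.0
    return -(over - over / ratio)

object_peak = 0.4  # linear peak amplitude of each of the two objects

# Rendering A: both objects land in the same speaker (worst case: peaks add up).
gain_same_speaker = compressor_gain_db(to_db(object_peak + object_peak))

# Rendering B: each object lands in its own speaker.
gain_separate_speakers = compressor_gain_db(to_db(object_peak))
```

In rendering A the summed signal exceeds the threshold and would be reduced by roughly 3 dB; in rendering B nothing happens. A compression setting tuned on one rendering therefore does not carry over to another.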

In particular, the aspect of binaural rendering and associated sonic colorations (through the use of a wide variety of binaural renderers) poses a further challenge, as it is virtually impossible to influence this during mastering.

New playback options such as streaming also mean that the mastering process is changing: The step of merging different tracks as an album is sometimes relegated to the background, as music streaming is often track-based at the listener’s end rather than playback of a complete album. Thus, mastering is often done only for individual tracks, not for an entire album – the album mastering step is partially eliminated (except, of course, for albums that are produced completely immersively).

New mastering steps also include verifying the authoring process (correct formats and metadata), archiving object-based mixes (for example as ADM files), and encoding into the appropriate format, together with the associated preparation for all distribution and playback paths.
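What such a verification could look like is sketched below. This is not an ADM validator and does not follow any specific profile; the field names (`name`, `azimuth_deg`, `elevation_deg`) and the allowed ranges are assumptions for this example.

```python
def verify_objects(objects):
    """Return a list of human-readable problems found in the object metadata."""
    problems = []
    seen_names = set()
    for index, obj in enumerate(objects):
        name = obj.get("name")
        if not name:
            problems.append(f"object {index}: missing name")
        elif name in seen_names:
            problems.append(f"object {index}: duplicate name {name!r}")
        else:
            seen_names.add(name)
        # Assumed ranges: azimuth within +/-180 degrees, elevation within +/-90.
        for key, limit in (("azimuth_deg", 180.0), ("elevation_deg", 90.0)):
            value = obj.get(key)
            if value is None or not -limit <= value <= limit:
                problems.append(f"object {index}: {key} missing or out of range")
    return problems

problems = verify_objects([
    {"name": "vocal", "azimuth_deg": 0.0, "elevation_deg": 0.0},
    {"name": "vocal", "azimuth_deg": 200.0, "elevation_deg": 10.0},
])
```

Here the second object is flagged twice: once for the duplicate name and once for an azimuth outside the assumed range.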

Dependence on 3D audio technology

When comparing both types of production, it is also striking how much the deviations from the conventional production workflow depend on the respective underlying object-based audio technology. One example of this is the encoding and resulting playback:

For stereo productions, a .wav file is usually exported and encoded as an AAC or MP3 file, for example (which, thanks to the widely installed decoders, can be played back almost anywhere). For object-based audio productions, currently either an ADM file with a special technology-dependent profile is created, or a proprietary format is exported, which is then partially encoded into different formats in the encoding process.

This allows the file to be played back on different devices. It also highlights one of the innovations of object-based audio: in different steps of the workflow, special formats are used that are not (yet) compatible with each other. The decision for one of the formats has to be made much earlier in the object-based workflow than in the conventional stereo or 5.1 surround production chain. However, conversion tools can help here and transfer productions from one format to another.

Objects in music production

The importance that audio objects can have specifically in music production is linked to the production type and music genre. Both affect the object-based production workflow in much the same way as they affect a stereo production.

On the one hand, a distinction must be made between live productions and studio productions. In a jazz or classical mix (as well as in live productions), the ensemble in the room (and thus the recording technique) is of particular importance.

In contrast, in an electronic (as well as studio-based) production, the design and combination of recorded and synthetically generated sound elements plays a greater role.

Live production, jazz and classical music

Especially when recording an ensemble in a room, crosstalk occurs between the individual instruments. This must be taken into account in the mix (delay compensation) and can lead to problems when instruments are used as individual objects.

In terms of object-based audio production methods, this means that the focus can shift depending on the genre. A classical music production benefits in particular from precise localization of individual objects, as well as from immersion and spatiality: the ability to map the entire room, including the height dimension, means that the acoustics of different concert halls can be represented.

In combination with flexible playback rendering (for example, binauralization), this means that more realistic listening experiences can be simulated for the listener. Possible user interactivity during playback can also contribute to this.

User interactivity is another feature of object-based audio systems such as MPEG-H 3D Audio. In the authoring process, various presets can be specified that use metadata to define whether and to what extent users can interact with the material. In music, examples would be a selection of the listener’s position in the hall (stalls, first balcony, conductor’s position) or a “play-along” preset in which the solo voice is deactivated so that users can play along freely.
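The following sketch shows how presets and interactivity limits could be modelled as metadata. It is not the MPEG-H metadata format or API; the `Interactivity` class, the preset names and the gain ranges are assumptions for illustration.

```python
from dataclasses import dataclass

MUTED = float("-inf")  # gain in dB used here to mark a deactivated object

@dataclass(frozen=True)
class Interactivity:
    # Defines whether users may change an object's gain, and by how much.
    allowed: bool
    min_gain_db: float = 0.0
    max_gain_db: float = 0.0

# Presets defined during authoring: base gain in dB per object.
PRESETS = {
    "default": {"solo": 0.0, "orchestra": 0.0},
    "play-along": {"solo": MUTED, "orchestra": 0.0},
}

# Per-object limits for user interaction.
RULES = {
    "solo": Interactivity(allowed=True, min_gain_db=-12.0, max_gain_db=6.0),
    "orchestra": Interactivity(allowed=False),
}

def apply_user_gain(preset, obj, requested_offset_db):
    """Return the resulting gain in dB after applying a user request."""
    base = PRESETS[preset][obj]
    rule = RULES[obj]
    if not rule.allowed or base == MUTED:
        return base  # interaction not permitted, or object deactivated
    offset = max(rule.min_gain_db, min(rule.max_gain_db, requested_offset_db))
    return base + offset
```

A request such as `apply_user_gain("default", "solo", 20.0)` is limited to the authored maximum of 6 dB, while the orchestra cannot be changed at all and the solo voice stays muted in the “play-along” preset.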

Studio production (pop, electro, or similar)

In “abstract” (for example, electronic) productions, on the other hand, the sound elements are usually available individually, whether recorded or synthetically generated. They can be staged as moving objects, thus creating an atmospheric soundscape.

In the case of electronic music, it is also particularly useful to create a three-dimensional spatiality through creative object panning (used in moderation). Here – in contrast to classical music – there is no need to pay attention to correct and realistic spatial positioning and reproduction.
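A moving object is, in the end, position metadata that changes over time. As a rough sketch (the keyframe representation and the function name `azimuth_at` are assumptions, not the automation format of any specific tool), a movement can be described with a few keyframes and interpolated linearly in between:

```python
from bisect import bisect_right

def azimuth_at(time_s, keyframes):
    """Linearly interpolate an object's azimuth between keyframes.

    keyframes: list of (time_s, azimuth_deg) tuples, sorted by time.
    Before the first and after the last keyframe the position is held.
    """
    times = [t for t, _ in keyframes]
    if time_s <= times[0]:
        return keyframes[0][1]
    if time_s >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, time_s)          # index of the next keyframe
    (t0, a0), (t1, a1) = keyframes[i - 1], keyframes[i]
    return a0 + (a1 - a0) * (time_s - t0) / (t1 - t0)

# A synth pad sweeping from hard right (-90) to hard left (+90) in four seconds.
sweep = [(0.0, -90.0), (4.0, 90.0)]
position_mid = azimuth_at(2.0, sweep)
position_early = azimuth_at(1.0, sweep)
```

Halfway through the sweep the object sits in the center; slower or faster movements only need different keyframe times, which makes it easy to keep the panning “in moderation”.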

Different genres can thus benefit from different aspects of object-based audio – although the focus should always be on the listener.

Are stereo habits transferable to 3D mixes?

In the classical field in particular, there are traditional and established listening habits that should also be considered, at least to some extent, in object-based immersive productions.

Techniques that have proven themselves in stereo productions also apply to object-based immersive mixes. 3D audio is an extension of stereo. Established production workflows are thus still relevant and can be enriched by new production techniques and possibilities.

Overall, the immersive aspect plays a particularly important role in object-based music production. At the same time, this can also lead to increased emotionality.

In addition, flexible playback rendering means that consumers can listen to the resulting productions on headphones, soundbars or loudspeaker systems. Personalization options are available as well, which adds value compared to immersive mixes produced purely binaurally or channel-based.

Conclusion

In conclusion, it will be some time before similar conventions and – at least partially – standardized workflows become established in the field of object-based, immersive music productions.

However, the familiar production workflows of stereo music production do not have to be fundamentally changed, but continue to serve as a basis on which new processes can be built.

Object-based audio brings with it many new features, from user interactivity and new creative possibilities through three-dimensional space to flexible rendering. In music production, the latter two points in particular play a role.

In contrast to conventional 2-channel stereo music production, encoding and decoding processes in particular are not yet commonplace, and thus take on a more complex role in the workflow.

However, as with other Next Generation Audio topics, we are currently experiencing the “innovation process” of the new technologies live.

Now is therefore a good opportunity for all audio professionals interested in object-based music production to get involved in these new workflows right from the start! If you want to be part of it and need help getting started, just drop me an email!
