Personalized Spatial Audio – The holy grail called HRTF

I have always wanted to write an article about personalized spatial audio. But honestly, the topic is so nerdy that I didn’t feel it was relevant enough. I also have a specific opinion on it, but more on the pros and cons later.

One of the reasons is that when you talk about personalized spatial audio, you can’t get around one thing: the HRTF, or head-related transfer function. Sounds complicated. Well, it is!

But bear with me. Apple just announced personalized spatial audio. So now, taking pictures of your ears is becoming cool. Don’t believe me? It’s already a thing!

Update:

- 22/09: iOS 16 now includes the feature. At the bottom of the article, you’ll find more information on how Apple approaches personalized 3D audio.

- 22/09: Immerse Gaming | Logitech is spatial audio software that brings camera-based head tracking to any Logitech G and Astro headset, old and new hardware alike. It offers AI-driven personalized HRTFs, low-latency head tracking with any webcam, and the ability to stream spatial audio on Twitch, Streamlabs and more.

How does personalized spatial audio work?

Every person has a different pair of ears. The pinna in particular, also called the auricle, shapes how we experience the sound of our environment. Of course, there are many other things to measure as well, such as head size, shoulders, or even the ear canal.

So, a bit like a fingerprint that exists only once, we all hear the world slightly differently. This is not very relevant for conventional audio technology such as loudspeakers or regular headphones. But with spatial audio, we suddenly need to account for these small differences we all have.

How do we hear spatially?

There are three main factors that allow us to perceive sound as 3D audio:

- ITD: Interaural Time Difference

- ILD: Interaural Level Difference

- HRTF: Head-related transfer function

Sounds super abstract, but let me give you an easy example. Imagine you hear a car coming from the left. Acoustically, the following happens:

- ITD: The sound waves reach the left ear before the right ear, because in this example our left ear is closer to the car, right?

- ILD: The sound is also louder at the left ear. This is because our head acts a bit like a wall for the right ear in this example.

- HRTF: Our head, shoulders and outer ears also filter the sound, so the left and right ears receive different frequency curves. Long story short: with our two ears, our brain can distinguish these differences between left and right. We have learned all our lives what they sound like, which is why we can localize sound sources in space, like a knock at the door, people talking to us, or the car in this example.
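To put some numbers on ITD and ILD, here’s a minimal Python sketch. It uses Woodworth’s classic spherical-head formula, ITD = r/c · (θ + sin θ), which assumes a rigid, spherical head and a distant source in the horizontal plane. The head radius of 8.75 cm is an assumed "average" value, exactly the kind of average that personalization later replaces. The function names are my own, not from any library.

```python
import math

SPEED_OF_SOUND = 343.0        # m/s, air at roughly 20 °C
AVERAGE_HEAD_RADIUS = 0.0875  # m, assumed "average" head (not your head!)


def woodworth_itd(azimuth_deg: float, head_radius: float = AVERAGE_HEAD_RADIUS) -> float:
    """Interaural time difference in seconds (Woodworth spherical-head model).

    Azimuth in the horizontal plane: 0° = front, +90° = right, -90° = left,
    valid for |azimuth| <= 90°. ITD is defined here as arrival time at the
    left ear minus arrival time at the right ear, so a negative value means
    the sound reaches the left ear first.
    """
    theta = math.radians(azimuth_deg)
    return head_radius / SPEED_OF_SOUND * (theta + math.sin(theta))


def _rms(signal: list[float]) -> float:
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(s * s for s in signal) / len(signal))


def ild_db(left: list[float], right: list[float]) -> float:
    """Interaural level difference in dB; positive means the left ear is louder."""
    return 20.0 * math.log10(_rms(left) / _rms(right))


# The car from the example, 90° to the left:
print(f"ITD: {woodworth_itd(-90) * 1000:.2f} ms")          # about -0.66 ms
print(f"ILD: {ild_db([0.8, -0.8], [0.4, -0.4]):.1f} dB")   # 6.0 dB
```

Keep in mind that real ILDs depend strongly on frequency (small at low frequencies, large at high frequencies), and that frequency dependence is exactly what the HRTF captures.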

So far, there is no need to personalize anything, because all the examples I just mentioned work with loudspeakers or natural sounds in our environment. But what if we want to reproduce 3D audio on headphones?

What is binaural audio?

Welcome to the world of binaural audio! It’s basically a special version of stereo, meaning it also uses just two audio channels. Since we only have two ears, this technique works. But only on headphones: each channel must reach its ear directly, without any space in between like we have with loudspeakers.

Hey, but what about surround? Why do we need multichannel audio like 5.1? On loudspeakers, yes, we do need more audio channels! But there is no need to personalize the sound, since the personalization already happens in our own ears. Even when there are multiple people in a room, each of us hears the sound through our own ears.

How does spatial audio work for headphones?

If we want to bring that surround sound experience to headphones, we need some rendering magic. This is exactly what Apple has been doing with Dolby Atmos Music, for instance, or with Dolby Atmos for films. The renderer takes the spatial audio signal, which contains all the three-dimensional audio data, and renders it to two audio channels. The result is binaural audio made specifically for headphones. Some also call it headphone 3D.
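The simplest textbook way to render channel-based surround to two channels is "virtual loudspeakers": each channel is filtered with the pair of head-related impulse responses (HRIRs, the time-domain form of the HRTF) measured at that speaker’s position, and everything is summed per ear. Here’s a minimal sketch of that idea in plain Python. The channel names and toy HRIR values are hypothetical, and this is not a description of how Dolby’s or Apple’s renderers work internally; a real-time renderer would typically use much longer filters and FFT-based convolution.

```python
Signal = list[float]


def convolve(x: Signal, h: Signal) -> Signal:
    """Direct linear convolution; the result has len(x) + len(h) - 1 samples."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def add_into(acc: Signal, sig: Signal) -> None:
    """Sum sig into acc in place, growing acc if sig is longer."""
    if len(sig) > len(acc):
        acc.extend([0.0] * (len(sig) - len(acc)))
    for n, value in enumerate(sig):
        acc[n] += value


def render_virtual_speakers(
    channels: dict[str, Signal],
    hrirs: dict[str, tuple[Signal, Signal]],
) -> tuple[Signal, Signal]:
    """Binaural downmix: each channel goes through the (left, right) HRIR pair
    of its virtual speaker position, then all channels are summed per ear."""
    left: Signal = []
    right: Signal = []
    for name, signal in channels.items():
        h_left, h_right = hrirs[name]
        add_into(left, convolve(signal, h_left))
        add_into(right, convolve(signal, h_right))
    return left, right


# Hypothetical toy HRIRs: the left speaker is louder and earlier in the left ear.
toy_hrirs = {
    "L": ([1.0, 0.0], [0.0, 0.5]),
    "R": ([0.0, 0.5], [1.0, 0.0]),
}
left, right = render_virtual_speakers({"L": [1.0], "R": [0.0]}, toy_hrirs)
print(left, right)  # [1.0, 0.0] [0.0, 0.5]
```

A 5.1 source would simply have six entries in both dictionaries, one HRIR pair per virtual speaker.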

Click here to read why Apple Music with Dolby Atmos sounds bad.

To make this work, we need the HRTF. You can think of the processing as follows: the playback device, which can be an app, an iPhone or a TV, reads the audio information. From the metadata, the decoder knows where the audio objects are placed in space. Now the renderer calculates:

"OK, so what would these audio objects sound like to a human being?"

With these complex filter curves, an audio object on headphones can suddenly sound as if it is coming from behind, for instance. This is something classic stereo cannot do: we just have left and right. On top of that, with plain stereo on headphones we perceive the sound inside our head (in-head localization), because our brain is missing information.

Only with HRTF rendering does our brain get the information it needs to understand where a sound is coming from in space.
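The first part of the renderer’s question, "where is this object relative to my head?", is plain geometry. Here’s a minimal sketch, assuming the object position arrives as Cartesian coordinates relative to the listener, with x pointing forward, y to the left and z up (the convention SOFA files use; object-audio formats typically define their own, often room-based, coordinate systems). The resulting angles are what a renderer would then use to pick or interpolate the HRTF filters. The function name is hypothetical.

```python
import math


def object_direction(x: float, y: float, z: float) -> tuple[float, float, float]:
    """Convert a listener-relative position to (azimuth°, elevation°, distance).

    Assumed convention: x = front, y = left, z = up.
    Azimuth is counterclockwise from the front (90° = left, 180° = behind),
    wrapped to [0, 360); elevation is positive above ear level.
    """
    distance = math.sqrt(x * x + y * y + z * z)
    azimuth = math.degrees(math.atan2(y, x)) % 360.0
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return azimuth, elevation, distance


# An object 2 m directly behind the listener, something plain stereo cannot place.
print(object_direction(-2.0, 0.0, 0.0))  # (180.0, 0.0, 2.0)
```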

Why personalized audio is a game-changer

As mentioned, spatial audio requires a lot of real-time calculation. To generate all that data, the renderer uses a 3D model of a head, a bit like a dummy head microphone, but virtual.

Unfortunately, all this data is based on average values. The engineers basically measured a lot of human heads and ears and then built a model that matches as many people as possible.

So chances are good that Apple’s spatial audio works for you. But if you have “a special head”, the 3D sound effect may never really have worked for you, because your ears are too different from the values the renderer uses.
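One common way to put a number on "too different" is the log-spectral distortion (LSD) between two HRTF magnitude responses: the root-mean-square of their level difference in dB across frequency bins. The sketch below assumes you already have both magnitude responses for the same direction, sampled at the same frequencies. The magnitudes in the usage lines are made up for illustration, not measured data.

```python
import math


def log_spectral_distortion(h_generic: list[float], h_personal: list[float]) -> float:
    """RMS difference in dB between two magnitude responses (same frequency bins)."""
    if len(h_generic) != len(h_personal) or not h_generic:
        raise ValueError("responses must be non-empty and have the same length")
    squared = [
        (20.0 * math.log10(g / p)) ** 2 for g, p in zip(h_generic, h_personal)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Made-up magnitudes: identical in the lower bins, a 2x (about 6 dB) mismatch
# in the upper bins, where the pinna shapes the sound most.
generic = [1.0, 1.0, 0.5, 0.8]
personal = [1.0, 1.0, 1.0, 0.4]
print(f"{log_spectral_distortion(generic, personal):.2f} dB")  # 4.26 dB
```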

How can I access personalized spatial audio?

For this, we need the right content. Once again, immersive content is called for, which I report on constantly here on my blog. The good news is that there is plenty. Basically, any multichannel audio content can be turned into personalized sound, such as:

- surround and immersive sound from movies and music (Auro 3D, Dolby Atmos, Dolby Atmos Music, MPEG-H)

- ambisonics from 360° videos (YouTube VR, Facebook 360)

- interactive audio from games or VR (Unity, Unreal, Steam, PlayStation)

Personalization just needs to be implemented in the software before the sound reaches the headphones. By the way, you can use any pair of headphones. So no, you don’t need Apple AirPods for personalized spatial audio. But they do give you head tracking, which of course makes the immersive audio experience better.
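What head tracking adds is easy to show: when you turn your head, the renderer has to counter-rotate the scene so the sound stays put in the room. Here’s a minimal sketch for the horizontal plane only, assuming yaw and azimuth are both measured counterclockwise in degrees (90° = left); real head trackers report full 3D orientation. The function name is hypothetical.

```python
def head_relative_azimuth(source_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Azimuth of a room-fixed source relative to where the listener's nose points.

    Both angles are counterclockwise from the room's front direction
    (90° = left). The result is wrapped to [0, 360).
    """
    return (source_azimuth_deg - head_yaw_deg) % 360.0


# The car is 90° to your left; you turn your head 90° to the left to look at it.
print(head_relative_azimuth(90.0, 90.0))  # 0.0, now straight ahead
```

Without head tracking, the head yaw is effectively always 0°, so the car would "turn with you" and stay at 90° no matter where you look.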

What personalized software solutions are out there?

Of course, there is not just Apple. A lot of manufacturers have been working in the field, so here is an overview. I’ll add more text as soon as I get to it.

Genelec Aural ID, SOFA

Genelec, a loudspeaker manufacturer, was the first to develop a pipeline where you can simply take pictures and videos of your ears. You then send the files to the company via the app, and a 3D model of your head and ears is created. From it, your personal HRTF is calculated and delivered as a so-called SOFA file that you can load into plugins in your audio workstation.
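SOFA (Spatially Oriented Format for Acoustics, standardized as AES69) stores HRTFs as measured impulse responses. In the common SimpleFreeFieldHRIR convention, the variable Data.IR holds M measurements × R receivers (the two ears) × N samples, and SourcePosition lists each measurement’s direction as azimuth and elevation in degrees plus distance in metres. Since SOFA files are netCDF-4/HDF5 files, libraries such as h5py or netCDF4 can read these arrays. The sketch below leaves the file I/O out: assuming those two arrays are already loaded as nested lists, it picks the measured direction closest to a requested one. The function names are hypothetical, and the interpolation between neighbouring measurements that many renderers perform is not shown.

```python
import math


def _unit_vector(azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Direction on the unit sphere (x = front, y = left, z = up)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))


def nearest_hrir(source_positions, data_ir, azimuth_deg, elevation_deg):
    """Return (index, [left_ir, right_ir]) of the measurement closest to the target.

    Closest = largest dot product of unit vectors = smallest great-circle angle.
    source_positions: M rows of [azimuth°, elevation°, distance_m] (SOFA SourcePosition)
    data_ir:          M x 2 x N impulse responses (SOFA Data.IR, receivers = ears)
    """
    target = _unit_vector(azimuth_deg, elevation_deg)
    best_index = max(
        range(len(source_positions)),
        key=lambda m: sum(
            a * b for a, b in zip(target, _unit_vector(*source_positions[m][:2]))
        ),
    )
    return best_index, data_ir[best_index]


# Tiny hypothetical grid: front, left, behind, right at ear level, 1.2 m away.
positions = [[0, 0, 1.2], [90, 0, 1.2], [180, 0, 1.2], [270, 0, 1.2]]
irs = [[[1.0], [1.0]], [[1.0], [0.3]], [[0.6], [0.6]], [[0.3], [1.0]]]
print(nearest_hrir(positions, irs, azimuth_deg=100, elevation_deg=10))  # (1, [[1.0], [0.3]])
```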

Embody IMMERSE™

Embody has done a good job of implementing personalized sound for gaming. Their software makes use of 5.1 surround sound, which most games use. So personalized 3D audio has already arrived in games.

It enables head tracking via webcam and comes with presets that work best with Logitech headsets. It targets streamers and content creators, on Twitch for instance.

But they don’t target gamers exclusively. For audio professionals, the technology has been integrated into Steinberg’s digital audio workstation.

Dolby pHRTF

Dolby’s new tool allows sound creators to listen to Dolby Atmos content through headphones. With Dolby Atmos Personalized Rendering, creators can now develop spatial audio mixes over headphones. For the first time with Dolby Atmos, the listening experience is rendered using a personalized HRTF (Head Related Transfer Function).

THX Ltd. and VisiSonics Partner

THX has licensed certain 3D audio and personalization technologies from VisiSonics and is incorporating them into its products. VisiSonics technologies will be utilized in THX® Spatial Audio tools for game developers and music producers, and advance THX audio personalization for headset manufacturers.

Sony 360 Reality Audio headphones

With headphones like the WH-1000XM4, WH-1000XM5 and WF-1000XM4, Sony’s headphone app does something similar. Here, you are asked to take photos of both ears. This optimizes the sound of Sony’s in-house spatial audio format, Sony 360 Reality Audio (360RA).

Click here to read more about the Sony 360RA experience.

AFAIK: Crystal River Engineering

They were probably the first to even think about this rocket-science technology. Why rocket science? Well, the Convolvotron™ is a popular HRTF-based spatial audio system developed for NASA and manufactured by Crystal River Engineering in the nineties. So read more about spacial audio (get it?!) and personalized HRTF here.

Apple personalized audio

Since Apple works closely with Dolby, chances are good there will be a collaboration here as well. More information will follow after WWDC.

Apple plans to scan your ears, analyze their shape and use that data. This is similar to Genelec’s approach, but iPhones have depth-sensing hardware that could be an advantage: LiDAR on some models, and the TrueDepth camera system with the selfie camera and infrared emitter.

A lot of Mac tech review sites, written by non-audio experts, think this will only be a feature for newer Apple headphones. I think it could just be a software update without any new hardware. But knowing Apple’s policy of making us buy new products … let’s see.

And here’s the update already. Contrary to what many self-proclaimed trade magazines claim, you don’t need the new AirPods Pro 2. I was able to set up personalized 3D audio successfully with my first-generation AirPods Pro. The setup is self-explanatory: you just need to have your AirPods connected and go to the appropriate menu. More information is available on the Apple Support page.

Which hardware do I need for Apple’s Personalized Spatial Audio?

To set up Personalized Spatial Audio, you need an iPhone with iOS 16 and a TrueDepth camera. After signing in with your Apple ID, you can use Personalized Spatial Audio with:

- AirPods Pro (1st or 2nd generation), AirPods Max, AirPods (3rd generation) or Beats Fit Pro.

- Audio-visual content from a supported app.

- An iPhone or iPod touch running iOS 16 or later; an iPad running iPadOS 16.1 or later; an Apple Watch running watchOS 9 or later; an Apple TV running tvOS 16 or later; or a Mac with Apple silicon running macOS Ventura.

Where can I find personalized 3D audio in settings?

- On your iPhone, go to Settings > [Your Spatial Audio-enabled device] > Personalized Spatial Audio > Set up Personalized Spatial Audio.

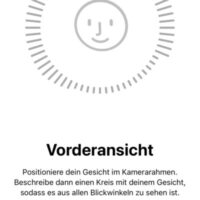

- To record the front view, hold your iPhone about 12 inches directly in front of you. Position your face in the camera frame, and then slowly move your head in a circle to show all angles of your face. Tap Next.

- To capture your right ear, hold your iPhone with your right hand. Move your right arm 45 degrees to the right, then slowly turn your head to the left. To capture a view of your left ear, take your iPhone in your left hand. Move your left arm 45 degrees to the left, and then slowly turn your head to the right. Audio and visual cues help you set up.

What you need to know about personalized spatial audio

So all in all, this technology will help you localize 3D sounds better on headphones. It will give you an even better experience with any immersive media mixed using immersive audio workflows. It will just feel more natural, a bit like finding the perfect shoe, where there is no one-size-fits-all solution.

When talking about personalization, I have to mention NGA (next-generation audio) with MPEG-H. Better localization of 3D audio objects is just one type of adjustment we can make. Imagine you could also turn up the dialogue or turn down music that is too loud yourself. Read more on that in my article on NGA audio.
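Object-based audio makes this kind of personalization possible because dialogue, music and effects stay separate until playback. Here’s a minimal sketch of the idea, with hypothetical object names and gain limits: the producer defines how far each object may be changed, the listener picks a gain within that range, and the renderer mixes the result. It illustrates the concept only; it is not MPEG-H’s actual metadata syntax or any real decoder API.

```python
def db_to_linear(gain_db: float) -> float:
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


def personalized_mix(objects, allowed_db, user_db):
    """Mix mono objects after applying listener gains clamped to producer limits.

    objects:    name -> list of samples (all the same length)
    allowed_db: name -> (min_dB, max_dB) set by the producer (hypothetical);
                objects without an entry cannot be changed (fixed at 0 dB)
    user_db:    name -> requested change in dB; missing names stay at 0 dB
    """
    length = len(next(iter(objects.values())))
    mix = [0.0] * length
    for name, samples in objects.items():
        low, high = allowed_db.get(name, (0.0, 0.0))
        gain = db_to_linear(min(max(user_db.get(name, 0.0), low), high))
        for n, s in enumerate(samples):
            mix[n] += gain * s
    return mix


objects = {"dialogue": [0.5, 0.5], "music": [0.5, -0.5]}
limits = {"dialogue": (-6.0, 6.0), "music": (-20.0, 0.0)}
# The listener asks for +10 dB of dialogue, which is capped at +6 dB,
# and turns the music down by 6 dB.
print(personalized_mix(objects, limits, {"dialogue": 10.0, "music": -6.0}))
```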

But what does all that mean? How can you create a compelling experience with personalized sound? Don’t hesitate to ask, I’ve got you covered!
