360° Heatmap in VR-Videos - Spatial vs. Static Sound

Content

One of the most important questions for a producer of virtual reality videos is where the viewer will end up looking once he or she is wearing the VR headset. One of the most effective methods of influencing the view is to use 360° audio as a guide. At events and lectures, I see the same thing again and again, and I count myself among the guilty: there are many assumptions, but whether something works or not is rarely checked. What you would need is a series of experiments comparing spatial audio with static sound and measuring how much the experimental groups differ, preferably with a 360 heatmap.

I could never do this kind of research alone. Fortunately, there is the Munich VR 360° Video Meetup. There I got to know Sylvia Rothe from http://rubin-film.de/, who is now doing her Ph.D. at LMU Munich. In short: she can do programming, I can do sound. Both of us are enthusiastic about VR and wanted to investigate exactly this problem.

Update 2019/06

The results of this study have now been published officially: the paper appeared in the proceedings of the Tonmeistertagung and is scientifically accepted. I am happy to have been able to make a small contribution to how sound is perceived in virtual reality 360° videos.

Crossing Borders – A Case Study

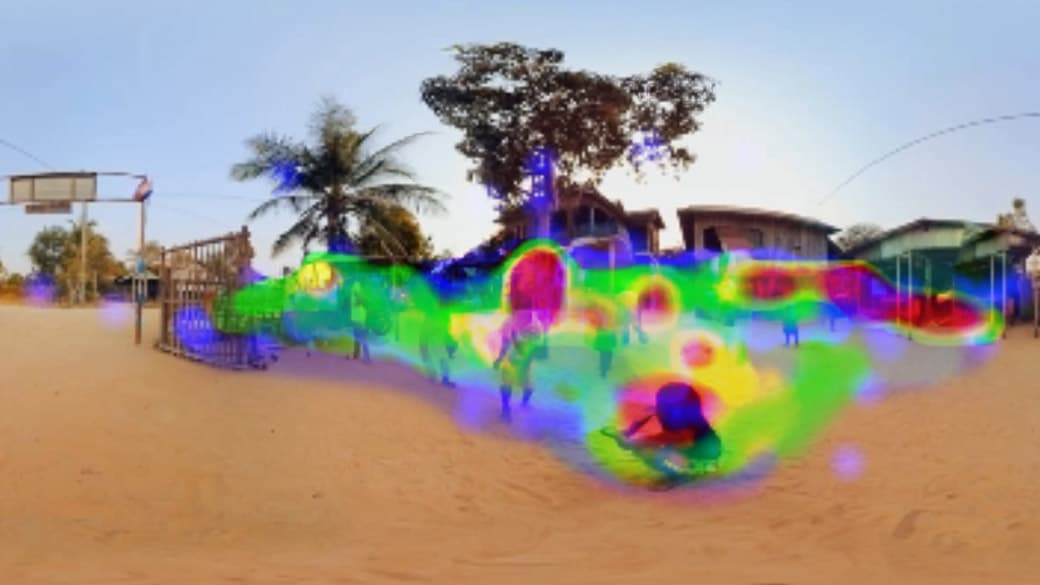

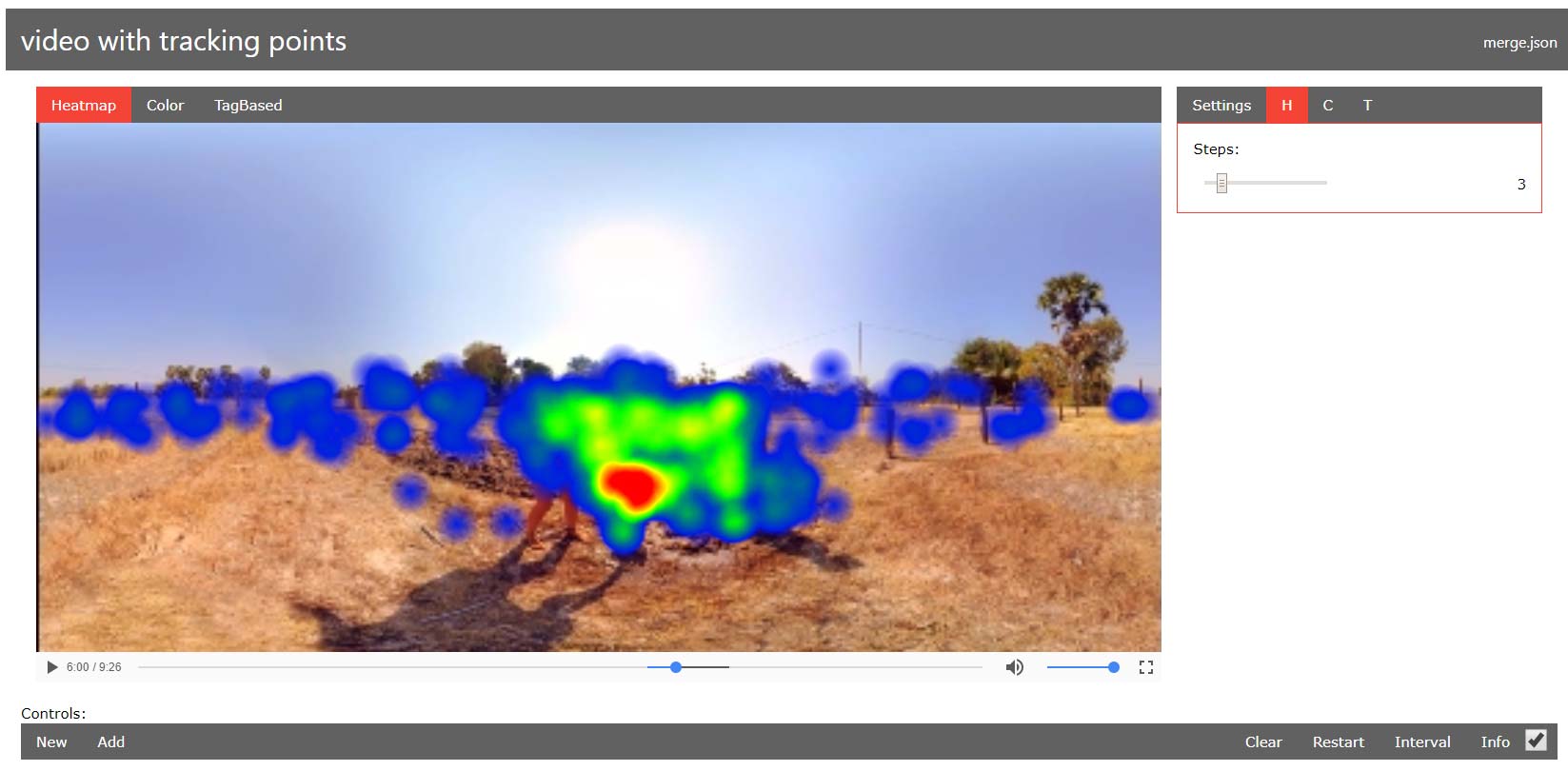

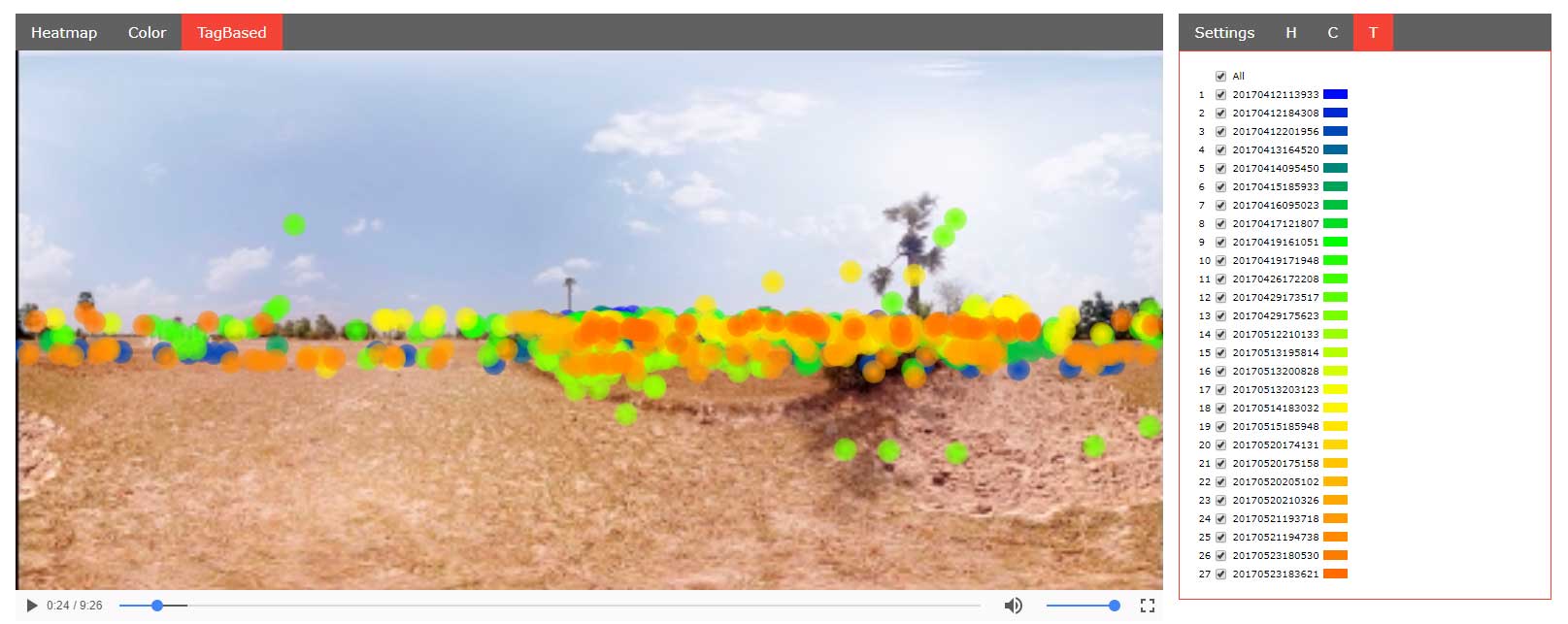

The source material is an episode of the 360° documentary Crossing Borders, about an orphanage in Cambodia called CPOC. It contains many different sequences, such as interviews, a voice-over narrator, landscapes, people, animals, and motorcycles. The music was muted to keep the focus on the rest of the soundtrack. For the study, a small app was programmed in Unity that stores the viewing direction as datasets, so that they can later be collected and processed for the 360 heatmap. The clip was played once with head-tracked sound (Ambisonics, tbe format) and once as normal stereo (a binaural down-mix from the 0° azimuth position, i.e. looking at the center of the equirectangular image). The images show a screenshot from the movie and, below it, the respective 360 heatmaps.
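To make the idea of "viewing direction to heatmap" more concrete, here is a minimal sketch of how logged directions could be counted on an equirectangular grid. It is not the Unity app from the study: the function names, the angle convention (yaw 0° at the image center and positive to the right, pitch positive upward) and the grid size are assumptions for illustration.

```python
def direction_to_cell(yaw_deg, pitch_deg, cols, rows):
    """Map a viewing direction to a (row, col) cell of an equirectangular grid."""
    # Wrap yaw into [-180, 180) and clamp pitch to [-90, 90].
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    pitch = max(-90.0, min(90.0, pitch_deg))
    # Assumed layout: left image edge is yaw -180, top image edge is pitch +90.
    col = int((yaw + 180.0) * cols / 360.0)
    row = int((90.0 - pitch) * rows / 180.0)
    # pitch == -90 would land one row past the end, so clip to the grid.
    return min(row, rows - 1), min(col, cols - 1)


def build_heatmap(samples, cols=36, rows=18):
    """Count how many (yaw_deg, pitch_deg) samples fall into each grid cell."""
    grid = [[0] * cols for _ in range(rows)]
    for yaw_deg, pitch_deg in samples:
        row, col = direction_to_cell(yaw_deg, pitch_deg, cols, rows)
        grid[row][col] += 1
    return grid
```

Each cell of the returned grid then holds the number of samples in which a viewer looked in that direction, which is the raw material for any heatmap coloring.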

Implementation of the set-up

The experimental setup was as follows:

- the subject takes a seat on a swivel chair

- the 9-minute clip is watched on the Samsung GearVR with headphones

- the gyroscope data is collected from the Samsung Galaxy S7 as .vtt files to create the 360 heatmap afterward

- in addition, the most important findings were noted

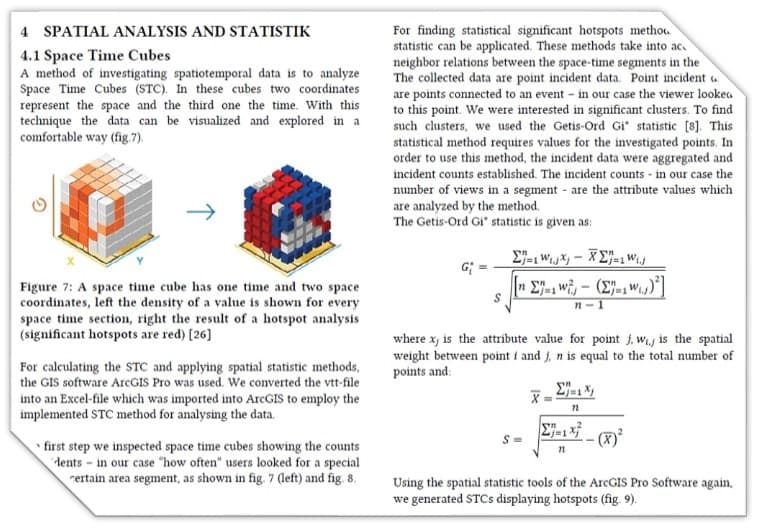

How exactly the heatmap was created is, to be honest, pretty nerdy. The short version: so-called “space time cubes” and the “Getis-Ord Gi statistic”. Here is a short extract from the scientific paper.
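For readers who want a feeling for what such a hot-spot statistic does, the following is a rough sketch. It is neither the extract nor the code used in the study. It assumes the Gi* variant of the Getis-Ord statistic with binary weights over a 3x3 neighbourhood, and it works on a flat 2D grid of counts only, whereas a space time cube would add time as a third dimension. All names are hypothetical.

```python
import math


def getis_ord_gi_star(grid):
    """Return a grid of Gi* z-scores for a 2D grid of counts.

    Assumption: binary weights, where each cell's neighbourhood is the 3x3
    window around it (including the cell itself), clipped at the grid edges.
    """
    rows, cols = len(grid), len(grid[0])
    values = [v for row in grid for v in row]
    n = len(values)
    mean = sum(values) / n
    # Global standard deviation; max() guards against tiny negative rounding.
    s = math.sqrt(max(0.0, sum(v * v for v in values) / n - mean * mean))
    if s == 0:
        # All cells identical: there are no hot or cold spots.
        return [[0.0] * cols for _ in range(rows)]

    result = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            local_sum = 0.0
            w = 0  # number of cells in the window = sum of binary weights
            for rr in range(max(0, r - 1), min(rows, r + 2)):
                for cc in range(max(0, c - 1), min(cols, c + 2)):
                    local_sum += grid[rr][cc]
                    w += 1
            # With binary weights, sum(w_ij^2) equals sum(w_ij) equals w.
            denom = s * math.sqrt((n * w - w * w) / (n - 1))
            out_row.append((local_sum - mean * w) / denom if denom else 0.0)
        result.append(out_row)
    return result
```

Cells with a clearly positive score are surrounded by more views than the overall average would suggest (hot spots), while clearly negative scores mark regions that were looked at unusually little.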

Thus there were two user groups, one called “Spatial” and one called “Static”. Both saw the same video but heard a different sound.

- Static means that the image rotates as usual in VR, but the sound always remains the same. This is called head-locked and corresponds to a downmix, also known as static binaural stereo. The soundtrack therefore remained identical for every user of this group and did not depend on the viewer's head movement.

- Spatial means that the sound, just like the image, changes its direction through head-tracking, as it does in reality, i.e. it is changed interactively by the user. Synonyms would be 3D audio, immersive sound, dynamic binaural stereo, etc. The soundtrack was therefore perceived slightly differently by each user: while the so-called 360° sound field was the same for everyone, the direction was rendered in real time depending on head movement and on what the user was seeing. VR sound, as I’d call it here.
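The difference between the two groups can be reduced to a single line of arithmetic. The sketch below is a simplification that only looks at the horizontal angle of one sound source; the function names and the sign convention (0° straight ahead, positive to the right) are assumptions, and a real renderer works on the whole sound field rather than on single angles.

```python
def wrap_degrees(angle_deg):
    """Wrap an angle into the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def perceived_azimuth(source_azimuth_deg, head_yaw_deg, head_tracked):
    """Direction of a sound source relative to the listener's nose."""
    if not head_tracked:
        # Static / head-locked: the mix ignores the head rotation.
        return wrap_degrees(source_azimuth_deg)
    # Spatial / head-tracked: the sound field counter-rotates with the head.
    return wrap_degrees(source_azimuth_deg - head_yaw_deg)
```

If an interviewee sits at 0° and the viewer turns 90° to the right, `perceived_azimuth(0, 90, True)` gives -90, so the voice comes from the left ear side, while `perceived_azimuth(0, 90, False)` stays at 0 and the voice remains in front no matter where the viewer looks.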

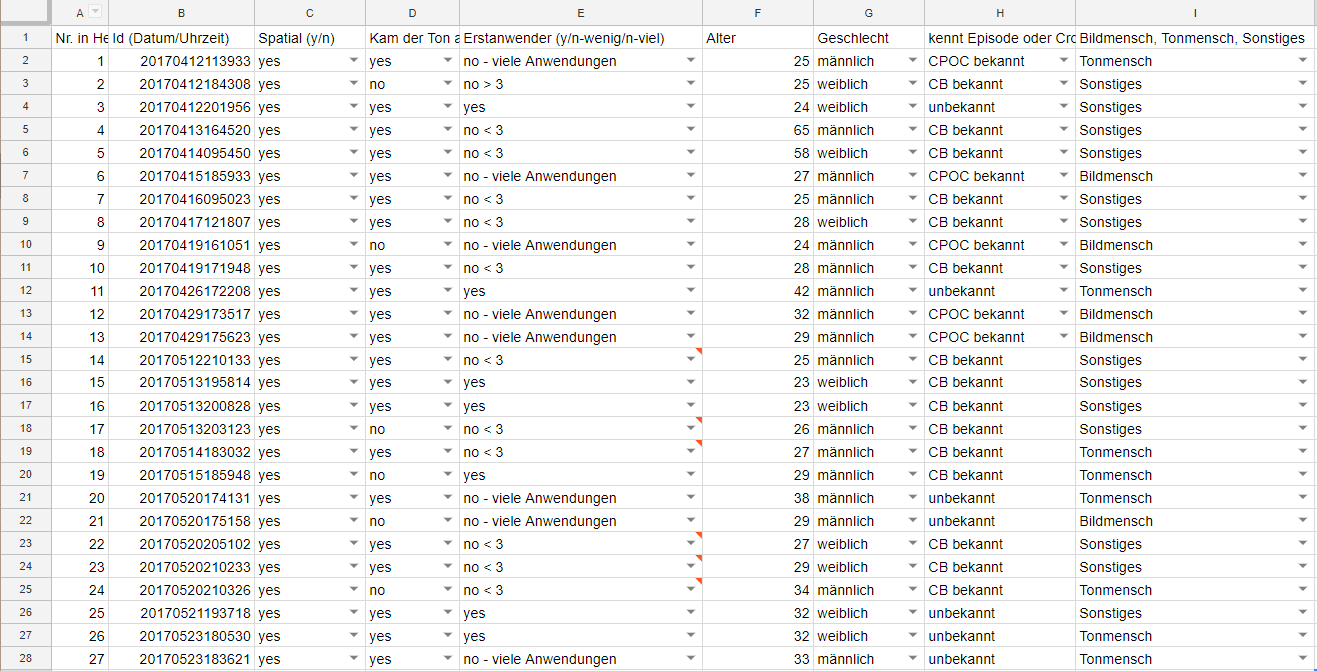

To date, we have been able to make about 20 evaluations per test group, so 50 in total. We recorded age, gender, profession and how experienced the subject is with the medium of virtual reality. This is what the survey looked like:

The Results

Similarities

Let’s start with the results we could find in both groups.

- humans draw attention, non-humans don’t. This is easily comparable to real life, where we don’t stare at a wall but look at our fellow human beings. Some buildings were not even looked at once, which means they are objects that guide the viewer away from themselves and can be used to build a panoramic 360° scene visually, like a theater stage.

- people mainly looked straight ahead, hardly more than 15° up or down in elevation and hardly more than 30° left or right in azimuth. One reason is how far a human can turn the head without moving the body. The content is also responsible, since the main action played out in the front, just as in daily life, where the most interesting things are at eye level and usually not above or below us, right?

- if you show a scene for the second time, in this case, an interviewee that was seen twice in the same setting, people feel comfortable looking away since they are already familiar with the surroundings.

- for some sounds, like traffic noise, it didn’t matter whether they were spatial or not. My advice would be: “make it believable, not realistic”. If someone hears traffic and sees cars, he or she doesn’t mind whether the sound is spatial, as long as it doesn’t come from the wrong direction. With important elements like humans it does matter, which I will come to later.
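The "mainly straight ahead" observation is easy to check on logged data. Here is a minimal sketch, assuming the samples are available as (yaw, pitch) pairs in degrees with yaw already wrapped to the range -180° to 180°; the function name and the data layout are hypothetical, and the default window simply reuses the 30° and 15° mentioned above.

```python
def share_within_window(samples, max_azimuth_deg=30.0, max_elevation_deg=15.0):
    """Fraction of (yaw_deg, pitch_deg) samples inside the central window."""
    if not samples:
        return 0.0
    inside = sum(
        1
        for yaw_deg, pitch_deg in samples
        if abs(yaw_deg) <= max_azimuth_deg and abs(pitch_deg) <= max_elevation_deg
    )
    return inside / len(samples)
```

A value close to 1.0 would mean that viewers almost never left the frontal window, which is worth knowing before interpreting differences between the two sound groups.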

Differences

Now it gets (even more) exciting, here are some first, careful conclusions:

- viewers with the static sound turned away from the interviewee after around 8 seconds. With spatial sound, people stayed around 1 to 3 seconds longer and mostly until the end of the scene. My guess is that with spatial sound, people have a better awareness of the other person. In real life, you wouldn’t turn your head away if a person is talking to you. If you did, you’d still hear him/her from the side, making you feel like he/she is still there and you are now being rude. With static sound, this effect seems to get lost and viewers turn away faster after having seen the protagonist.

- similarly, this also applies to details like animals and moving objects (motorbikes etc.). There was a scene with a pig where the spatial group just stayed there and kept watching it, while the static group saw it and immediately started to look away again.

- something the 360 heatmap showed in combination with the survey: the spatial sound group found the interviewee faster and confused him or her less often with a voice-over. They could easily distinguish whether a person was talking inside the scene (spatial sound) or as a narrator voice-over (mono, in-head localization). The static group heard both in mono, which resulted in disorientation for several viewers.

- since people weren’t told why the study was being done, even some sound guys couldn’t recall whether it was spatial or static sound. There seems to be only a small gap between a sound experience that feels natural and immerses you and one that doesn’t match the rotation of the visuals and therefore destroys the immersion.
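A number like "turned away after around 8 seconds" can be derived from the logged head direction. The following is only a sketch of one possible way to do it, not the evaluation used in the study: it assumes time-stamped yaw samples per viewer, a known azimuth of the interviewee, and a hypothetical tolerance of 30° for what still counts as "looking at" the person.

```python
def time_until_look_away(samples, target_yaw_deg, tolerance_deg=30.0):
    """Seconds from the first sample until the gaze first leaves the target.

    samples: list of (time_s, yaw_deg) tuples, sorted by time.
    Returns None if the viewer never looks away (or there are no samples).
    """
    if not samples:
        return None
    start = samples[0][0]
    for time_s, yaw_deg in samples:
        # Smallest signed angle between viewing direction and target.
        diff = (yaw_deg - target_yaw_deg + 180.0) % 360.0 - 180.0
        if abs(diff) > tolerance_deg:
            return time_s - start
    return None
```

Computed per viewer and then averaged per group, such a value would allow the static and the spatial group to be compared scene by scene.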

Learnings

- sometimes the HMD (head-mounted display) with the Samsung Galaxy S7 overheated, since playing a 360° video with 360° sound while collecting gyroscope data can be demanding

- the Samsung GearVR has no eye-tracking, so we collected the center position of the frame, which still gave great results. I think we make a lot of unconscious, fast eye movements when we see a new scene, before we start moving our head to the center position, and that is what eye-tracking might reveal.

- the main action of the content (Crossing Borders 360° documentary) was at 0° azimuth, where we received the most data. If the content forced the viewer to look around more, and thereby triggered the viewer more with spatial sound, the results would probably be even more obvious.

- also, the static stereo was a binaural downmix that sounded identical to the spatial sound at 0°, so the two mixes were already fairly similar, just without head-tracking.

- additional factors that are very hard to isolate are the content, as stated, the HRTF (head-related transfer function), the subject’s experience with VR and psychoacoustic effects.

Conclusion

This was my first attempt to see whether my work as a VR sound designer actually makes a difference, and luckily, it does. There is so much more work to do to show the effects of virtual reality sound on humans, but I hope this is helpful, and I welcome further investigation! Immersive audio is great regardless and is just starting to gain public awareness, which is what I try to push by giving talks and workshops and by offering mentoring.

YouTube Analytics VR 360 heatmap

Since people asked me why I didn’t use YouTube’s 360 heatmap report, let me comment on that quickly. YouTube has always collected a vast amount of data for a user’s YouTube channel: when, where, who, how long the video was watched, etc. For VR/360 content, even more is now available: where did the YouTube viewers look? In principle this is exactly what was described above, but there are some points to be careful with:

Pro:

- You don’t have to program your own app and can easily collect data.

- The evaluation runs on its own and, by reaching more viewers, can process far more user activity than one could alone.

Contra:

- The 360 heatmap in VR videos is only activated for the user after a certain number of views, about 1000+ in total.

- There is no experimental setup, so each user is in a completely different usage environment or on a different device. Whether they were watching with a Cardboard in the YouTube app or the smartphone was just lying on the table and not moved at all is hard to find out.

Nevertheless, it is a great feature and nice of YouTube that it has been implemented to help out VR content creators.

Update 2020/01

The service has been shut down because it wasn’t used frequently. Read more on Google support.

Here is the link to my YouTube video about the heatmap case study: https://youtu.be/_Wjw8ixThqw?si=A8ewRAO-WJvabC6q